When it comes to Talent Acquisition, AI is now infrastructure. Not “someday” infrastructure. Not “early adopter” infrastructure. Real, everyday infrastructure.

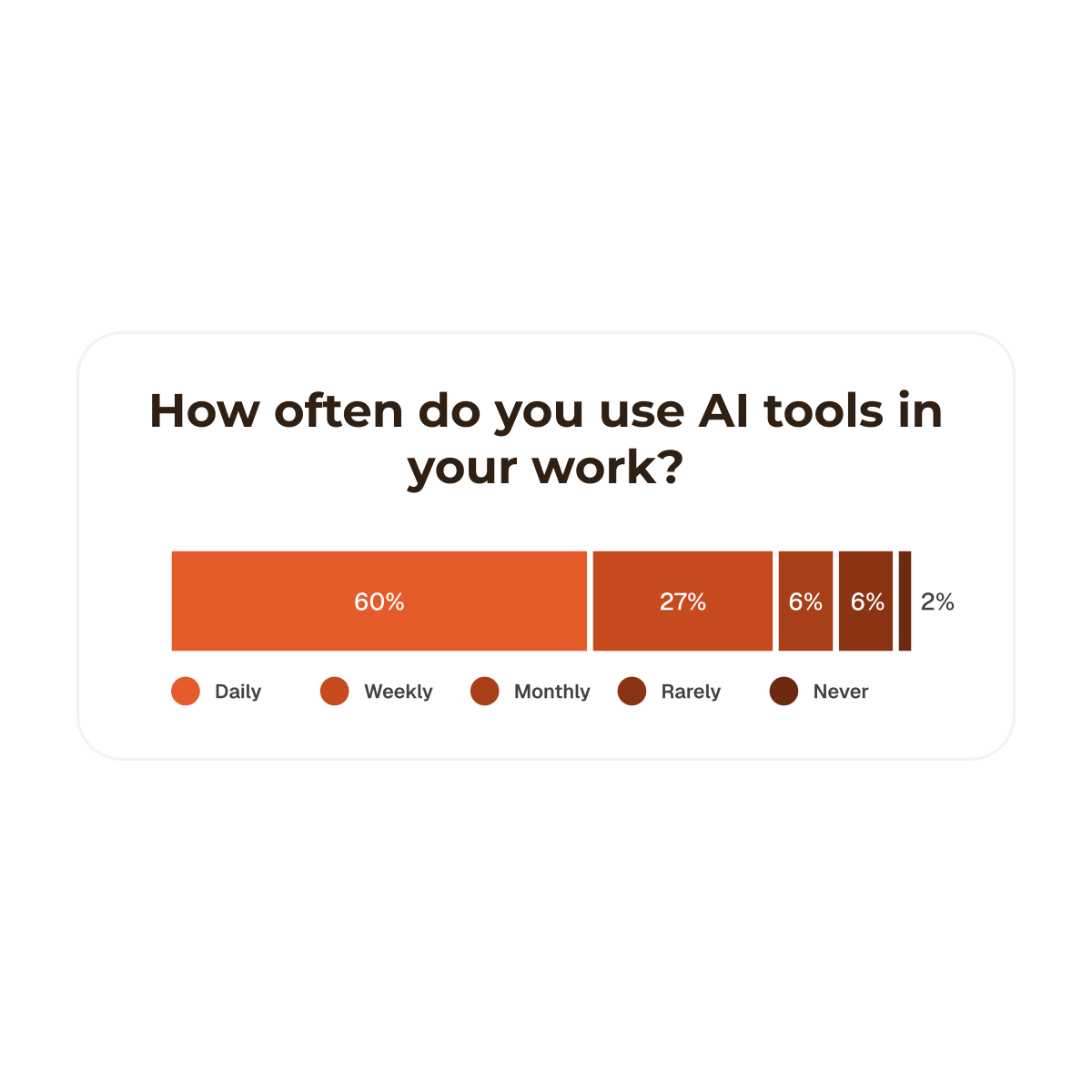

In our 2025 benchmark study in partnership with Elly, most respondents told us they use AI daily or weekly. That's a clear market signal. Put simply, AI is now a core part of how recruiting work happens.

But beneath that momentum sits a harder truth: AI is widely adopted but not widely trusted.

The next wave of TA AI isn't about more tools. Rather, it's about trust, integration, and governance. The teams that win won't be the loudest AI adopters. They'll be the most intentional ones.

If you want the full benchmark data, charts, and breakdowns, download the full report here: AI in Talent Acquisition 2025.

Let’s set the stage. TA is under pressure in a way that feels… structural.

Applications are up. Expectations for speed and personalization are up. Meanwhile, fraud and low-quality applicants are rising, and recruiter capacity is not magically expanding to match it.

In that environment, AI starts to look less like a shiny object and more like a life raft.

That shows up clearly in the data:

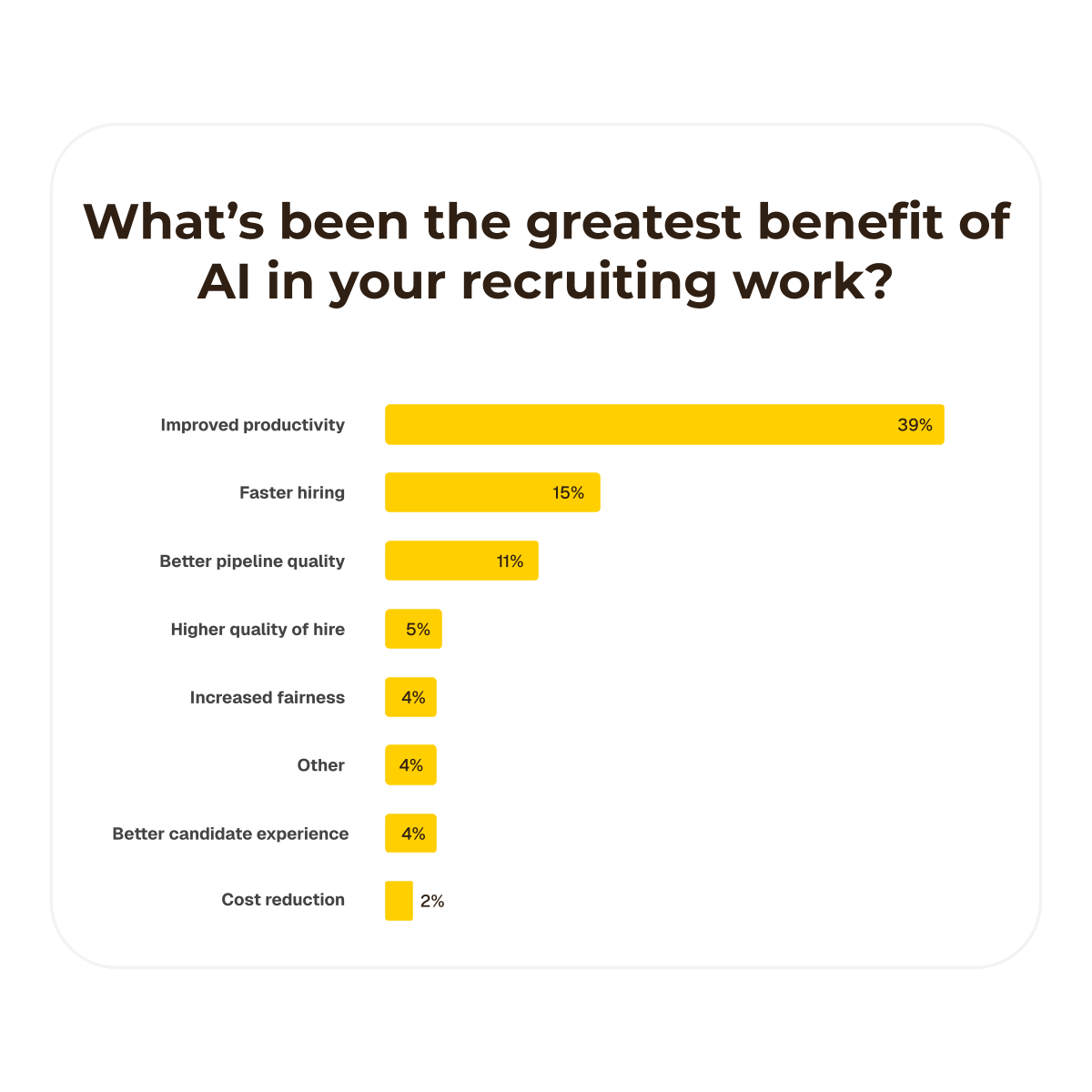

And when you ask talent teams what they're getting out of AI, the answer is refreshingly practical.

The top benefits aren't “revolutionizing work” or “replacing recruiters.”

The top answers are:

Translation: AI is helping teams keep up with their workloads and move faster through repetitive workflows.

Here’s the part most people keep skipping past when discussing AI. Yes, productivity gains are real. But trust is still wobbly.

Across our study's responses, the most common frustrations cluster around the same themes:

One respondent highlighted:

“AI tools sometimes sound very sure even when they’re inaccurate. That makes people double-check everything, which reduces trust.”

Ever run into the same issue?

That's essentially the “verification tax” in one sentence. AI can speed things up, but if you have to double-check everything, the value starts to leak out of the system. Another respondent captured the screening risk that keeps TA leaders up at night:

“When resumes do not follow a standard structure or when transferable skills are not explicitly stated, strong candidates get overlooked because the AI filters out profiles that do not match exact keywords.”

That's the hiring funnel quietly eating your best candidates. And then there is the most important line in the sand TA teams are drawing:

“The recruitment process should always be conducted by a human. AI should be a backup tool, not a primary source of information.”

AI is welcome as an assistant. It's still under scrutiny as an evaluator and probably will be for a long while.

When you look at where AI shows up most often, it's not surprising. Adoption is concentrated in the front half of the funnel, where volume is high and workflows are repetitive.

In our survey, the most common use cases include:

These are the places where speed matters, and where AI can remove the most administrative drag.

More advanced use cases like AI-driven interviewing or assessments are far less common. That's where risk, bias concerns, and candidate experience concerns collide. Teams are experimenting, but most are doing it cautiously.

This leads to one of the most useful lenses from the report, which we'll get to next.

Not all AI in recruiting is created equal. The market is starting to separate AI into two buckets:

Tools that help you move faster without directly making hiring decisions:

This category tends to be adopted quickly because the risk feels manageable. If the tool drafts a message that sounds slightly robotic, a human can fix it. If it misses a scheduling nuance, you can override it.

Tools that influence outcomes and selection decisions:

This is where trust drops and scrutiny rises. Because the cost of being wrong isn't just a minor inconvenience. Being wrong here means:

Right now, TA teams are telling the market: “We want help with volume. We're not ready to outsource judgment.”

If you want to know which teams will scale AI successfully, look past the tools and look at governance. The pattern in our study was simple: teams with formal policies report higher confidence and smoother adoption.

Policy doesn't magically make AI better, but it addresses some key issues: it clarifies what's allowed, reduces shadow IT, aligns TA with other teams (mostly legal, security, and IT), and creates consistency across teams and workflows. It's about standardization and workflow control. If you don't govern AI, AI will govern you.

Usually that takes the form of tool sprawl, inconsistent usage, and risk you can't explain when someone asks the hard questions.

If you've ever thought, “Why do we have five tools that all kind of do the same thing,” you're not alone.

One of the most consistent pain points in the open responses is fragmentation. Teams are trying to stitch together sourcing tools, note-taking tools, scheduling tools, compliance workflows, and applicant tracking systems that do not talk to each other.

That creates:

“We use Gemini for notetaking… but those don’t sync with Greenhouse, so we have to manually upload. It’s double work.”

Yikes, that's the opposite of ROI.

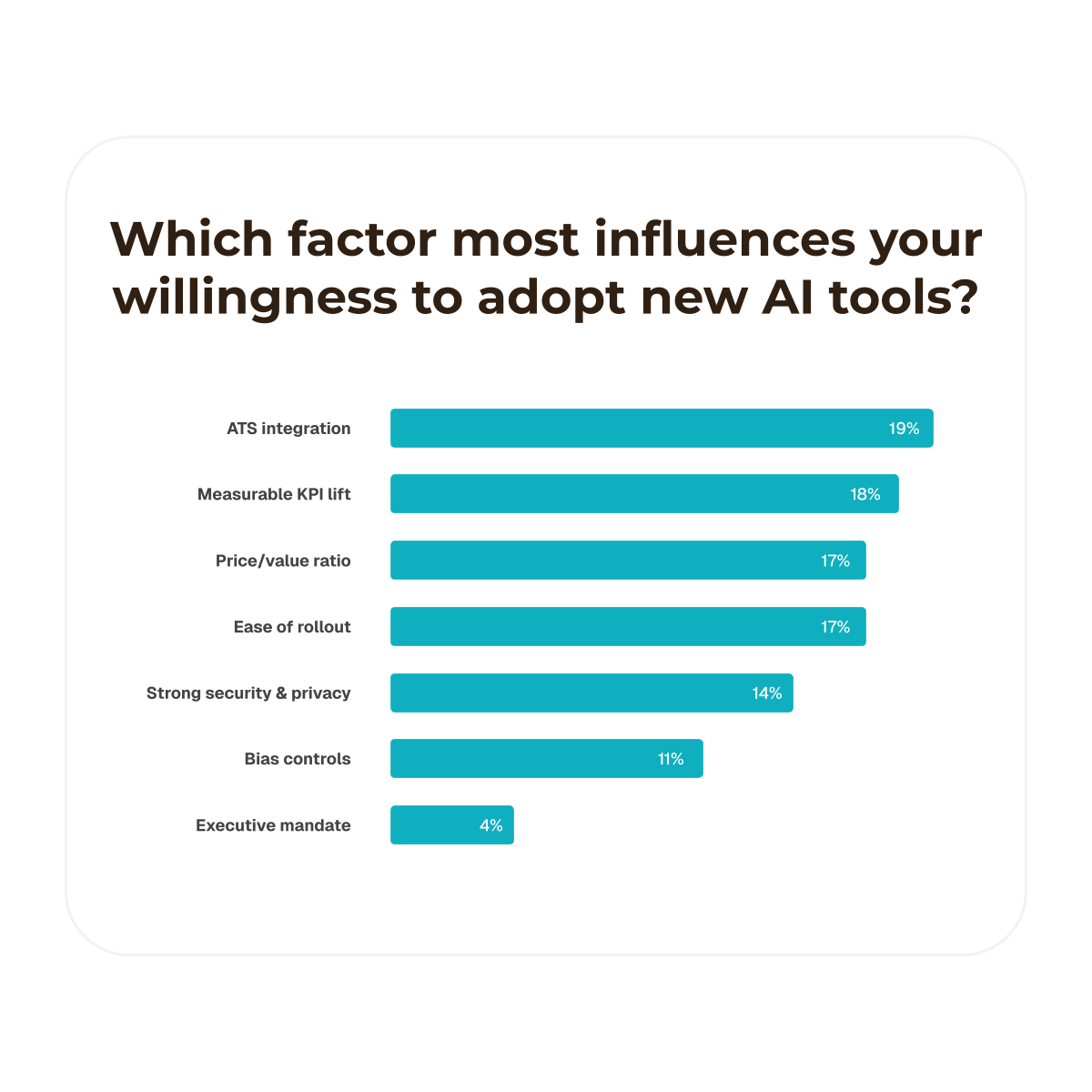

And this is why ATS integration shows up as a top adoption driver in the quantitative data. People don't want “more AI.” They want AI that fits into the systems they already live in.

There's a theme in the study that deserves to be taken very seriously: fake candidates.

Not “a few weird resumes.” Not “one scammy application.” A broader shift where AI has made it easier for candidates (and bad actors) to generate believable materials at scale.

Talent teams are feeling it, and they want tools that can keep up. One of the most common “magic wand” requests was some version of:

“Something around fraudulent candidates is still my number one issue.”

We're in a new age of TA, and AI is not only enabling recruiters. It's enabling applicants too, and not always in good ways.

If you haven't already, take a look at Kristen Habacht's post to get some more empathy:

If all you read about AI is hype, you might assume the market is either euphoric or panicked. In reality, it's practical.

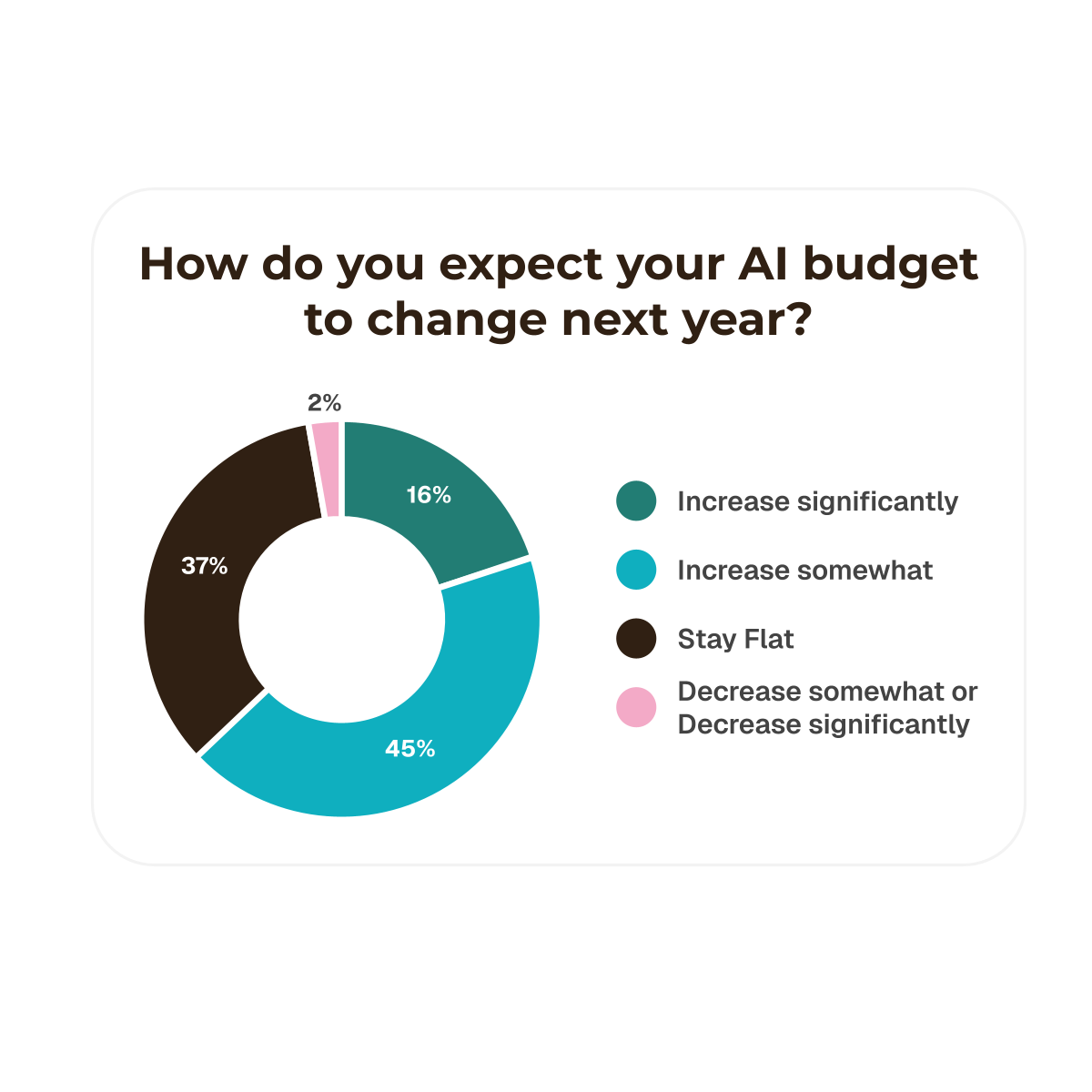

Most survey respondents predict AI will have a somewhat positive or very positive impact over the next 12 months. At the same time, budgets are holding strong. Most organizations expect AI budgets to increase or stay flat, with very, very few expecting decreases.

Confidence and investment track together. Teams that feel more confident using AI responsibly are more likely to expect budgets to rise. This points to an important truth: the future of AI spend in TA will be governed by trust, not novelty.

And when you ask teams what they want next, the answers aren't unreasonable:

The market is asking for systems that work under real hiring pressure.

If you are leading TA in 2025, you do not need another generic “AI strategy.” You need a few disciplined moves that create real leverage.

Here is what the data suggests matters most:

Policies are not paperwork. They are the foundation for confident adoption. Define what tools are approved, how data can be used, and where human review is required.

Tool sprawl will eat your ROI. Choose fewer tools that integrate directly with your ATS and core workflows.

Operational AI should be fully embraced. Judgment AI should be supervised, validated, and treated as high-stakes.

Confidence improves when people know what AI can do, what it cannot do, and how to prompt and QA outputs effectively.

These are not “nice-to-have features.” They are the factors that will determine whether AI improves trust in hiring or erodes it.

This article is the story. The report is the evidence.

The full report includes:

You can download the full “AI in Talent Acquisition 2025” report here: AI in Talent Acquisition 2025.

If you're trying to make AI work inside your TA function without losing trust, fairness, or control, the benchmark will help you see exactly where you stand and what to do next. We also invite you to take a look at our report partner Elly's breakdown here: AI in Talent Acquisition: 2025 Report on Adoption and Trust.

Elly streamlines hiring with AI tools that handle sourcing, interviewing, notetaking, and scheduling. Recruiters get cleaner pipelines, faster shortlists, and more time to focus on candidates, not admin work.